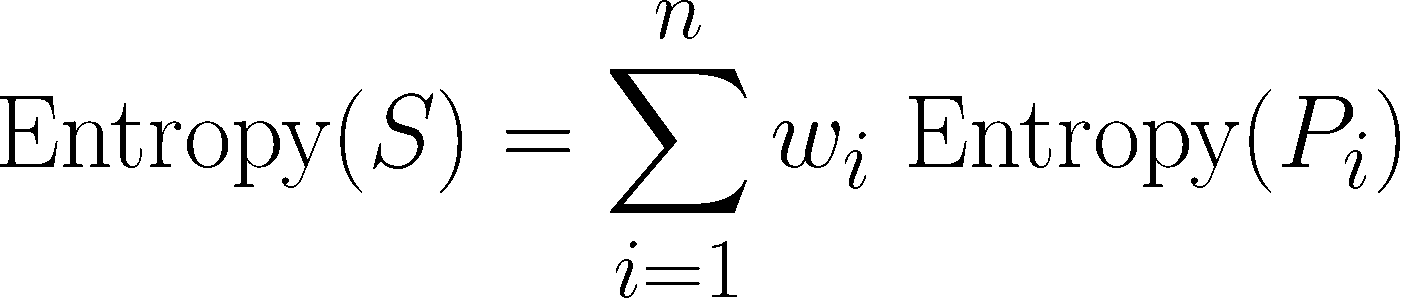

Entropy is calculated as:

Entropy = -Σ p * log2(p)

where p is the probability of each possible outcome in a dataset or system, and the sum runs over all outcomes. This formula measures the level of disorder or uncertainty in a given dataset. In decision trees, entropy is used to determine the best split at each node, which in turn improves the overall accuracy of the model.

What is a Decision Tree in Machine Learning?

A decision tree is a popular algorithm in Machine Learning used for classification and regression tasks. It is a tree-like model in which decision nodes represent tests on particular features and leaf nodes represent the outcome of the classification or regression task. Decision trees are useful for handling complex datasets and making decisions based on a set of rules or conditions. The tree is constructed by recursively partitioning the data into subsets based on the feature values until a stopping criterion is met. Decision trees are easy to understand and interpret, and they are widely used in applications such as fraud detection, customer relationship management, and medical diagnosis.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, plot_tree
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Load the iris dataset
X, y = load_iris(return_X_y=True)

# Split the dataset into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a decision tree classifier with entropy as the criterion
dtc = DecisionTreeClassifier(criterion='entropy')

# Fit the classifier to the training data, then predict the test set classes
dtc.fit(X_train, y_train)
y_pred = dtc.predict(X_test)

# Calculate the accuracy of the classifier
accuracy = accuracy_score(y_test, y_pred)
print("Accuracy:", accuracy)

# Plot the resulting decision tree
plot_tree(dtc)
plt.show()
```

In this example, we first load the iris dataset and split it into training and testing sets. We then create a decision tree classifier using entropy as the criterion for splitting the dataset. The classifier is fitted to the training data and used to predict the classes of the testing set. Finally, we calculate the accuracy of the classifier using the accuracy_score function from scikit-learn and plot the resulting decision tree using the plot_tree function and matplotlib.

Information gain is a measure used in decision trees to determine the usefulness of a feature in classifying a dataset. Each internal node of a decision tree represents a specific feature, and the branches stemming from that node correspond to the potential values that the feature can take. Information gain is based on the concept of entropy, where entropy is the measure of impurity in a dataset. It is used to determine which feature to split on at each internal node, such that the resulting subsets of data are as pure as possible. Information gain is determined by subtracting the weighted average of the entropies of the child nodes from the entropy of the parent node, where each child is weighted by the fraction of the parent's samples it contains. The formula for information gain can be represented as:

Information gain = entropy(parent) - [weighted average] * entropy(children)
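To make the entropy and information gain calculations described above more concrete, here is a minimal sketch in plain Python. The function names `entropy` and `information_gain` and the toy "yes"/"no" labels are illustrative assumptions, not part of scikit-learn or of the original example, and the sketch assumes base-2 logarithms so that entropy is measured in bits.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy (in bits) of a sequence of class labels (hypothetical helper)."""
    total = len(labels)
    if total == 0:
        return 0.0
    # p is the fraction of samples belonging to each class
    probabilities = [count / total for count in Counter(labels).values()]
    # p * log2(1 / p) is the same as -p * log2(p)
    return sum(p * math.log2(1 / p) for p in probabilities)


def information_gain(parent, children):
    """Parent entropy minus the size-weighted average of the child entropies."""
    total = len(parent)
    weighted_child_entropy = sum(
        (len(child) / total) * entropy(child) for child in children
    )
    return entropy(parent) - weighted_child_entropy


# Toy data (made up for illustration): a balanced parent node split in two
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"] * 1
right = ["yes"] * 1 + ["no"] * 4

gain = information_gain(parent, [left, right])
print(f"Parent entropy: {entropy(parent):.3f}")
print(f"Information gain: {gain:.3f}")  # roughly 0.278 for this split
```

A split that separated the two classes perfectly would give each child an entropy of 0 and therefore an information gain equal to the parent's entropy, which is the largest gain possible for that node.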
